Solytics Partners’ Unified AI Governance & Assurance Ecosystem for Healthcare

A unified, healthcare-focused framework to manage AI/ML and GenAI across the full lifecycle: inventory, validation, monitoring, observability, governance, documentation, and clinical workflows.

Get a 360° view of every healthcare AI system with centralized evidence, lineage, approvals, and runtime oversight – built for patient safety and clinical accountability.

Built-in workflows aligned to FDA, HIPAA, EU AI Act, and Health Canada expectations. Define policy once and enforce it everywhere with automated validation, monitoring, approvals, and audit-ready evidence, at enterprise speed.

The Healthcare AI Imperative and Challenges

Healthcare is scaling GenAI/LLMs to enhance clinical decision support, diagnostics, research acceleration, and patient engagement – but success requires governance designed for patient safety and multi-regulator scrutiny.

1. Fragmented operating model

2. Healthcare-specific GenAI risk

3. Assurance gaps from validation to patient care

4. Regulatory and stakeholder pressure

An Integrated Platform for the Healthcare AI Lifecycle

A connected, clinically governed approach to managing AI – from research to patient care – combining visibility, control, and assurance across every stage.

Inventory & Workflow Management

AI Governance & Policy-as-Code

Model Wrapping (Runtime Enforcement)

ML & GenAI Validation

LLM and RAG Evaluation

LLM and GenAI Observability

Continuous Monitoring

Documentation, Evidence & Incident Management

Our Healthcare AI Governance Capabilities & Differentiators

A unified ecosystem that embeds healthcare policy into workflows, enforces runtime controls, and continuously monitors safety and performance – so healthcare AI stays transparent, compliant, and deployment-ready.

Governance framework and lifecycle orchestration

Policy-embedded lifecycle gates from intake to retirement.

Control library + Policy-as-Code with Model Wrapping enforcement.

Provenance, lineage, and full version history with impact analysis.

Structured exception and change governance with rollback controls.

Patient safety, risk, compliance, and monitoring

Bias/fairness surveillance across cohorts with alerts and mitigations.

Performance and drift monitoring for real-world clinical settings.

LLM/GenAI observability (hallucinations, grounding, safety, PHI checks).

Progressive rollouts (shadow/canary/blue-green) with safety-driven triggers.

Transparency and explainability

Human-readable rationales and clinical attributions.

Auto-generated model cards/fact sheets with limitations and safeguards.

Role-based stakeholder views (clinical, risk, compliance, leadership).

Evidence, reporting, and collaboration

Audit-ready exports, assessments, and regulator-aligned reports.

Central evidence repository for approvals, validations, incidents, corrective actions.

Tasking, SLAs, and dashboards for clinical governance operations.

Healthcare Business Benefits & Outcomes

Accelerated Time-to-Clinical Value

Stronger Patient Safety Controls

Reduced Compliance Effort

Audit-Ready Evidence On Demand

Better Clinical Trust and Adoption

Adaptable to Changing Mandates

Why AI Governance Matters

Healthcare organizations face increasing regulatory scrutiny, as well as operational and reputational risk, when deploying AI. Comprehensive, multidisciplinary governance is essential to ensure transparency, accountability, and trust in AI adoption.

US & Canada

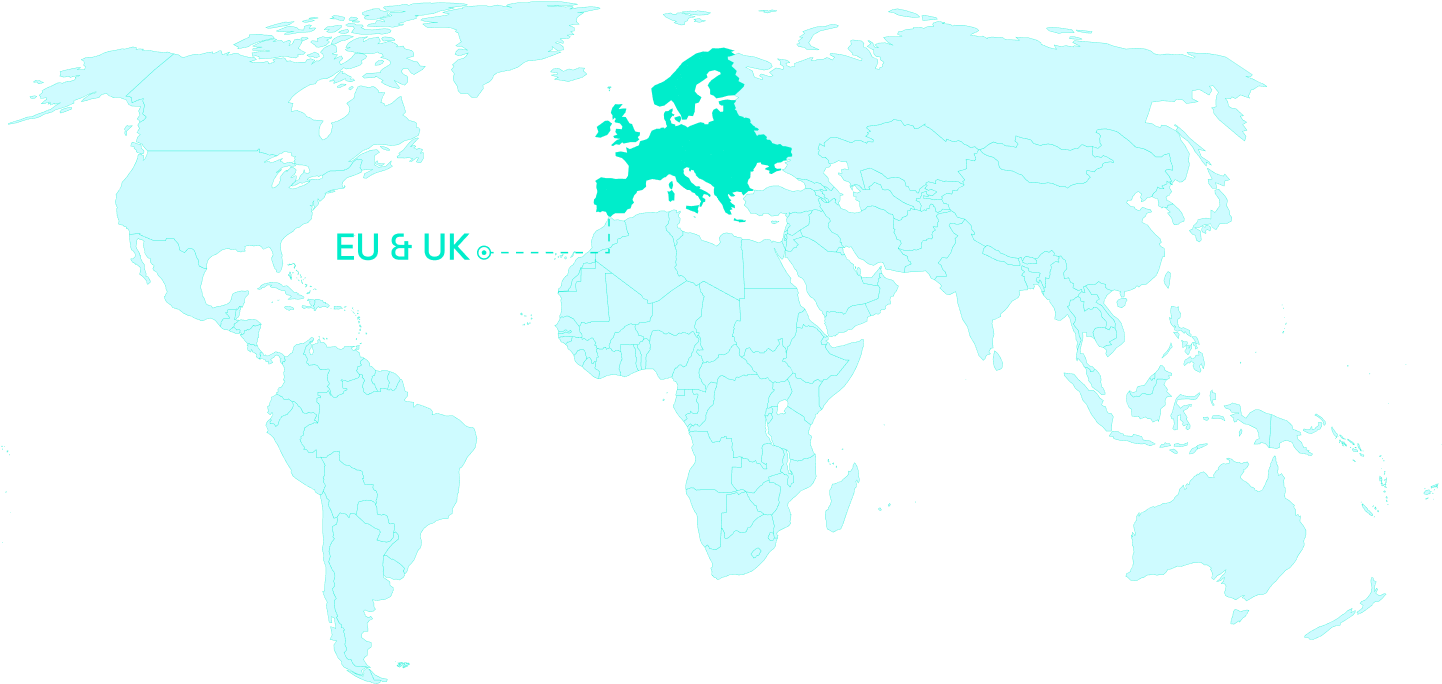

EU & UK

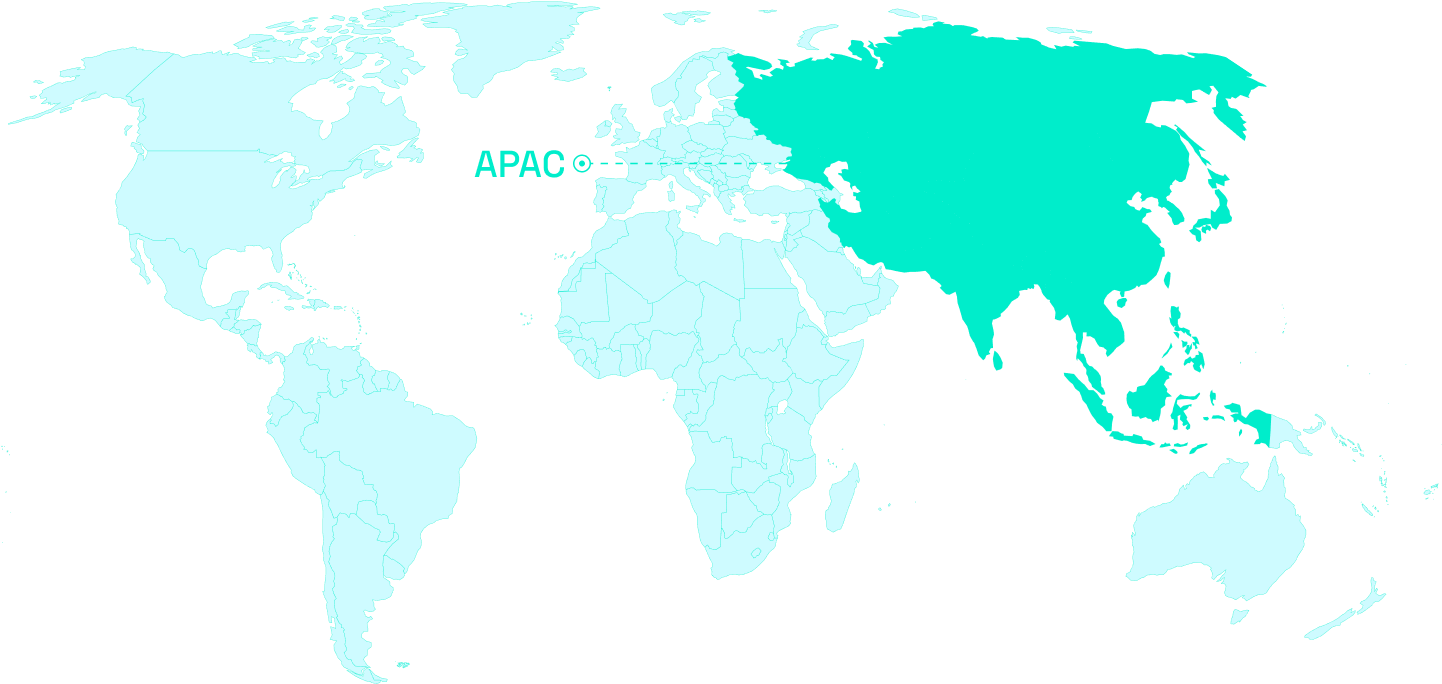

APAC

United States

- FDA AI/ML Medical Device Regulation (USA): Establishes premarket pathways for AI-enabled medical devices through 510(k) clearance, De Novo classification, or Premarket Approval (PMA), with emphasis on predetermined change control plans and post-market surveillance.

- HIPAA/HITECH Act (USA): Mandatory protection of Protected Health Information (PHI) in AI systems with enhanced enforcement mechanisms and penalties up to $1.5M annually per violation category.

- FDA Draft Guidance on AI-Enabled Device Software Functions (January 2025): First comprehensive lifecycle management guidance covering development through post-market surveillance for medical AI systems.

Canada

- Health Canada Medical Devices Regulations: Class II, III, and IV Machine Learning-enabled Medical Devices (MLMD) require comprehensive regulatory approval, with predetermined change control plans.

- PIPEDA (Personal Information Protection and Electronic Documents Act): Governs personal health data processing in AI systems with enhanced requirements for medical information protection.

- Pan-Canadian AI for Health (AI4H) Guiding Principles (January 2025): Federal-provincial-territorial framework for responsible AI adoption in healthcare settings.

European Union

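- EU AI Act (EU): Medical AI systems that are, or serve as safety components of, devices requiring third-party conformity assessment under the MDR/IVDR are classified as high-risk under Article 6(1). They require comprehensive risk management, transparency, human oversight, and post-market monitoring, with obligations phasing in between August 2026 and August 2027.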

- Medical Device Regulation (MDR) & IVDR (EU): Existing medical device compliance obligations now intersect with new AI Act requirements, creating dual regulatory oversight.

- GDPR (EU): Health data is special category data, requiring enhanced safeguards for AI-driven medical decisions and subject to restrictions on automated decision-making.

United Kingdom

- MHRA AI Strategy (UK): Software as a Medical Device (SaMD) and AI as a Medical Device (AIaMD) regulatory pathway, with emphasis on safety, transparency, fairness, accountability, and contestability principles.

- MHRA Transparency Guiding Principles for MLMDs (June 2024): Human-centered design requirements, performance monitoring, and risk communication for healthcare AI systems.

Asia-Pacific

- TGA Software as a Medical Device (Australia): Comprehensive regulatory framework for AI-enabled medical devices with risk-based classification and post-market surveillance requirements.

- PMDA AI Guidelines (Japan): Regulatory guidance for AI/ML-based medical devices with emphasis on clinical validation and real-world performance monitoring.

- Singapore PDPA Healthcare AI: Personal data protection framework for AI systems processing health data with requirements for consent, transparency, and risk-based management.

Additional Frameworks and Standards

- EU AI Act: high-risk use cases require a risk management system, logging, transparency, and post-market monitoring.

- ISO/IEC 42001 & 23894, NIST AI RMF: documented policies, measurable controls, and continuous risk treatment.

- Sector overlays (e.g., FDA SaMD, PRA SS1/23, SR 11-7, DORA): model inventory, stress testing, segregation of duties (SoD), audit trails, and resilience.