Solytics Partners’ Unified AI Governance and Assurance Ecosystem

A unified, industry-agnostic framework to manage AI/ML and GenAI across the entire lifecycle - validation, monitoring, observability, governance, documentation, inventory, and workflows.

Get a 360° vantage point into every AI system by ingesting signals from RAG, text-generation apps, cloud services (AWS, Azure, GCP), on-prem models, and third-party AI. Embed policy-as-code, enable human-in-the-loop, and ensure continuous assurance from design to retirement.

With built-in workflows aligned to the EU AI Act, NIST, and local regulations, you can define policy once and enforce it everywhere. Automate validation, monitoring, approvals, and evidence so every AI use case is transparent, safe, and audit ready.

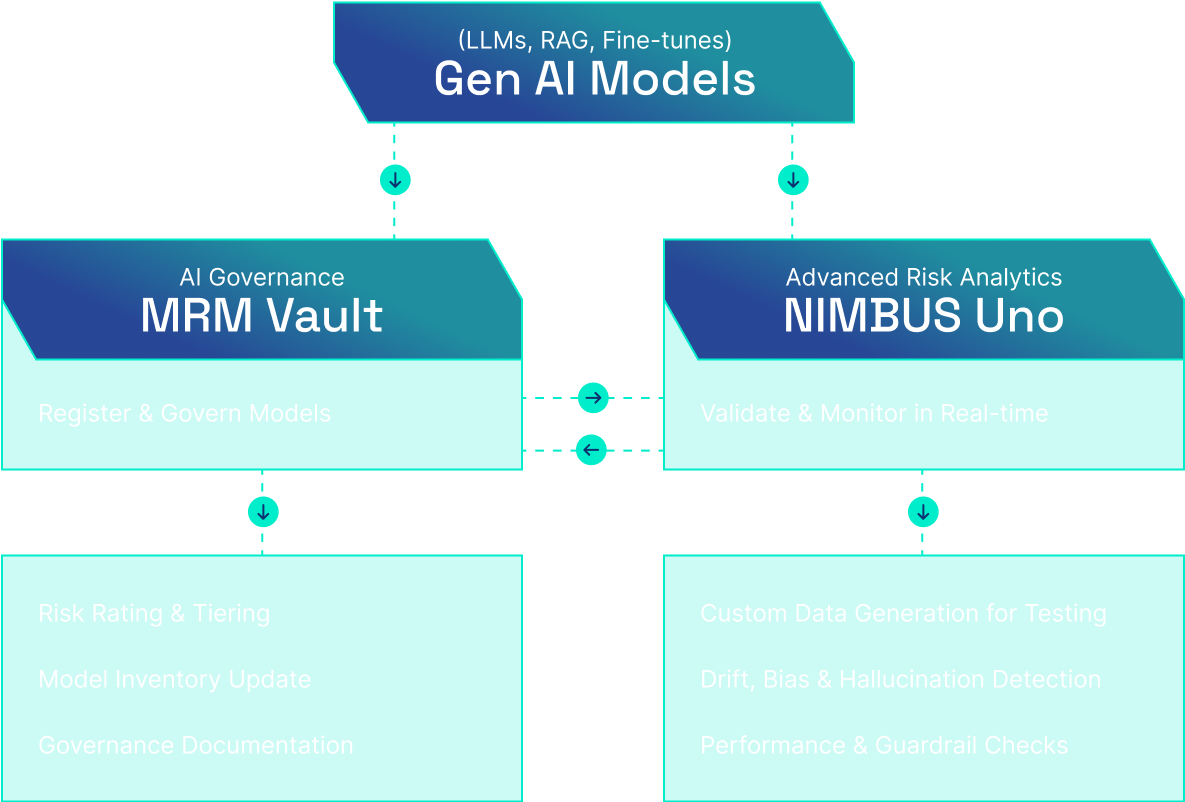

An integrated platform for the AI lifecycle

Our AI ecosystem provides a connected, well-governed approach to managing the full lifecycle of GenAI and LLMs - from adoption to retirement - addressing unique risks with an integrated suite that ensures visibility, control, and assurance at every stage.

1. Inventory & Workflow Management

- Central registry for AI/ML/LLM with owners, lineage, datasets, prompts, dependencies.

- Risk tiering (incl. EU AI Act high-risk mapping); lifecycle gates and role-based approvals.

- Connectors for bulk sync from AWS/Azure/GCP registries, CI/CD, data catalogues.

2. Governance & Policy as Code

- Control library mapped to EU AI Act, NIST AI RMF, ISO/IEC 42001/23894, GDPR/CCPA; sector overlays (Financial Services, Health & Life Sciences, Public Sector).

- Model Wrapping with runtime rate limits, output filters, redaction, safety rules - enforced across clouds and on-prem.

- Exception workflows, segregation of duties (SoD), impact analysis on retrains/prompt edits/data refreshes.
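As an illustration of the Model Wrapping idea above, here is a minimal Python sketch of a wrapper that applies a rate limit and an output redaction rule around any text-in/text-out model. It is not the platform's implementation: `ModelWrapper`, `RateLimitExceeded`, the email pattern, and the injectable `clock` parameter are all hypothetical names chosen for this example.

```python
import re
import time
from typing import Callable

# Hypothetical pattern for illustration; real redaction needs far broader coverage.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


class RateLimitExceeded(Exception):
    """Raised when the caller exceeds the configured call budget."""


class ModelWrapper:
    """Wraps a text-in/text-out callable with a sliding-window rate limit
    and an output filter that redacts email addresses."""

    def __init__(self, model: Callable[[str], str], max_calls: int,
                 per_seconds: float, clock: Callable[[], float] = time.monotonic):
        self.model = model
        self.max_calls = max_calls
        self.per_seconds = per_seconds
        self.clock = clock  # injectable so the limit can be tested deterministically
        self._calls = []    # timestamps of recent calls

    def __call__(self, prompt: str) -> str:
        now = self.clock()
        # Keep only the calls that fall inside the sliding window.
        self._calls = [t for t in self._calls if now - t < self.per_seconds]
        if len(self._calls) >= self.max_calls:
            raise RateLimitExceeded(
                f"more than {self.max_calls} calls in {self.per_seconds}s")
        self._calls.append(now)
        # Output filter: redact before the response leaves the wrapper.
        return EMAIL_RE.sub("[REDACTED]", self.model(prompt))
```

Because the wrapper only assumes a callable, the same rule set could in principle sit in front of a cloud-hosted, on-prem, or third-party model; how the product actually enforces this is not specified here.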

3. Validation (ML and GenAI)

- Accuracy/robustness/stability packs; red-team/adversarial and stress tests.

- Fairness/bias validation across cohorts with mitigation playbooks.

- Explainability (attributions, counterfactuals) with policy thresholds; human sign-offs.

4. Evaluation (LLMs and RAG)

- Accuracy/robustness/stability packs; red-team/adversarial and stress tests.

- Fairness/bias across cohorts with mitigation playbooks.

- Explainability (attribution, counterfactuals) with policy thresholds and human sign-offs.

5. Observability

- Trace-level logs for prompts, responses, retrievals across cloud and on-prem infrastructure.

- Latency/throughput/token-cost telemetry with SLO/SLA tracking.

- Embedding/feature drift visualizations; version diffs and change logs.

6. Continuous Monitoring

- Performance and drift monitors (data, embedding, concept) with budgets and alerts.

- Fairness & safety monitors (toxicity, jailbreaks, leakage).

- Progressive rollout patterns (shadow/canary/blue-green) and policy-based rollbacks.
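To make "drift monitors with budgets and alerts" concrete, the sketch below computes a Population Stability Index (PSI) between a baseline sample and a live sample and raises an alert when it exceeds a budget. PSI is one common drift statistic and is used here as an assumption; the text above does not say which statistics the platform uses. The function names and the 0.2 default budget (a widely used rule of thumb) are illustrative, and both samples are assumed to be non-empty.

```python
import math
from typing import Sequence


def psi(expected: Sequence[float], actual: Sequence[float], bins: int = 10) -> float:
    """Population Stability Index between a baseline and a live sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # A small floor avoids log(0) for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def drift_alert(expected: Sequence[float], actual: Sequence[float],
                budget: float = 0.2) -> bool:
    """True when PSI exceeds the budget (assumed threshold, tune per use case)."""
    return psi(expected, actual) > budget
```

A policy-based rollback could then be driven by such a boolean signal, for example by reverting a canary when `drift_alert` fires; that wiring is left out of this sketch.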

7. Documentation & Evidence

- Auto-generated model cards & fact sheets; AIAs/DPIAs; conformity reports.

- Tamper-evident audit trails for approvals, changes, incidents, retrains.

- Documentation Assistant to draft validation summaries and evidence packs.
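One common way to make an audit trail tamper-evident is a hash chain, where each entry's hash covers its own content plus the previous entry's hash. The Python sketch below shows that technique under stated assumptions: it is not a description of how the platform stores evidence, and `append_event`, `verify`, and the event fields are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(prev_hash: str, event: dict) -> str:
    # Canonical JSON (sorted keys) so the same event always hashes the same way.
    payload = json.dumps(event, sort_keys=True) + prev_hash
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_event(trail: list, event: dict) -> None:
    """Append an event whose hash covers its content and the previous hash."""
    prev_hash = trail[-1]["hash"] if trail else GENESIS
    trail.append({"event": event, "prev": prev_hash,
                  "hash": _digest(prev_hash, event)})


def verify(trail: list) -> bool:
    """Recompute the chain; an edited or reordered entry breaks it."""
    prev_hash = GENESIS
    for entry in trail:
        if entry["prev"] != prev_hash or entry["hash"] != _digest(prev_hash, entry["event"]):
            return False
        prev_hash = entry["hash"]
    return True
```

Note that a plain hash chain does not detect truncation of the most recent entries; in practice the latest hash would need to be anchored somewhere outside the trail.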

8. Incident, Breach & Change Management

- Detection → triage → RCA → CAPA workflows with SLAs and board-ready reports.

- Versioned change governance (prompts, datasets, weights, policies) with rollbacks.

Our AI Ecosystem unifies governance, risk, and operations across the AI/ML/LLM lifecycle. It embeds compliance into workflows, enables continuous monitoring, and provides validation, observability, and safety tools - enabling organizations to deploy AI responsibly, meet regulations, and drive trusted innovation.

- Policy-Embedded Workflows - Convert internal standards and external regulations into enforceable lifecycle gates from intake to retirement.

- Governance & Policy-as-Code - Control library mapped to EU AI Act, NIST AI RMF, ISO/IEC 42001/23894, GDPR/CCPA, and sector overlays, with runtime enforcement via Model Wrapping (rate limits, output filters, content rules, redaction).

- Provenance & Traceability - End-to-end lineage for data, prompts, embeddings, features, code, and decisions; full version history and impact analysis.

- Exception & Change Management - Structured workflows for retrains, prompt edits, and dataset refreshes; version control with rollbacks.

- Continuous Fairness & Bias Surveillance - Cohort-level metrics, automated alerts, and mitigation playbooks.

- Performance & Drift Monitoring - Statistical drift detection (data, embeddings, concepts), robustness, and stability checks.

- LLM/GenAI Observability - Hallucination tracking, RAG grounding scores, safety/abuse detection, latency and token cost monitoring.

- Security Posture - OWASP Top 10 protections for LLMs, including prompt injection defences, safe output handling, and data exfiltration prevention.

- Progressive Rollouts - Shadow, canary, and blue/green deployments with policy-driven rollback triggers.

- Human-Readable Rationales - Decision narratives, feature attributions, and counterfactual explanations.

- Model Cards & Fact Sheets - Auto-generated with intended use, datasets, limitations, and risk mitigations.

- Role-Based Stakeholder Views - Regulator, risk, clinical, and business perspectives with tailored access.

- Impact Assessments & Policy Reports - DPIA, AIA, and framework-aligned reports with one-click audit exports.

- Central Evidence Repository - Versioned approvals, validation findings, incidents, and corrective actions.

- Collaboration Tools - Task management, SLA tracking, and executive dashboards for board reporting.

- Central AI/ML/LLM registry with ownership, lineage, risk tiering, and version history.

- Lifecycle workflows: intake → validation → go-live → change → retirement.

- Dependency mapping and impact analysis across datasets, prompts, embeddings, features, and services.

- Bulk import & API connectors to registries, notebooks, CI/CD, and data catalogues.

- Standardized test packs for accuracy, robustness, stability, stress/adversarial testing.

- Bias and fairness validation with mitigation libraries.

- Explainability with acceptance thresholds and human-in-the-loop validation.

- LLM/RAG evaluation: hallucination/faithfulness scoring, grounding scores, safety/PII checks, benchmarking, and prompt versioning.

- Trace-level logging for prompts, responses, retrievals, and features.

- Latency, throughput, and token/cost telemetry with SLA tracking.

- Embedding and feature drift analytics, prompt/version diffs, and change logs.

- Auto-generated model cards, fact sheets, and conformance reports.

- Tamper-evident audit trails for approvals, changes, and incidents.

- Documentation assistants for validation summaries and evidence packs.

Organizations across industries are rapidly integrating Generative AI (GenAI) and Large Language Models (LLMs) to transform how they operate. These technologies are unlocking new efficiencies, enabling personalization at scale, delivering faster insights from unstructured data, and opening innovation opportunities in product design, research, and knowledge management. However, while the potential is significant, adoption comes with challenges, which are listed below.

- “Pilot zoo” with duplicated efforts; no single use-case/model inventory.

- Unclear RACI and decision rights; approvals scattered across email/Excel.

- Shadow AI and vendor sprawl; little evidence reuse across programs.

- Hallucinations and ungrounded answers; no systematic grounding score for RAG.

- Missing prompt governance (versioning, allow/deny patterns, secret/PII guards).

- Prompt injection/jailbreaks, context leakage, and output handling gaps.

- Latency/cost sprawl without SLOs; token usage invisible to finance/ops.

- Nonstandard validation packs; fairness not measured across cohorts; limited adversarial/robustness testing.

- Sparse observability (no trace-level logs, weak drift detection, manual A/B, missing human-rating loops).

- Weak change governance: retrains/prompt edits shipped without approvals or rollback plans.

- EU AI Act: high-risk use cases require a risk management system, logging, transparency, and post-market monitoring.

- ISO/IEC 42001 & 23894, NIST AI RMF: documented policies, measurable controls, continuous risk treatment.

- Sector overlays (e.g., FDA SaMD, PRA SS1/23, SR 11-7, DORA): model inventory, stress testing, SoD, audit trails, resilience.

Business Benefits and Outcomes

Fast Adoption with Minimal Disruption

Pre-built governance workflows & configurable controls enable rapid deployment into existing environments, ensuring teams can operationalize AI quickly without slowing innovation.

Accelerated Innovation with Precise Control

Embed governance upstream to remove last-minute compliance fixes, streamline approvals, and cut time-to-market while maintaining full control over processes.

Lower Risk & Cost through Smart Automation

Automate manual and repetitive compliance tasks, reduce dependency on scarce resources, and minimize audit findings - driving measurable cost efficiencies.

Seamless Integration Across Ecosystems

Connect effortlessly with your existing AI, data, and IT landscapes – spanning cloud (AWS, Azure, GCP), on-prem, and third-party AI services.

Regulatory Confidence through Configurability

Produce audit-ready evidence on demand and adapt instantly to emerging mandates with easy, no-code configuration.

Trusted AI at Scale with Proven Effectiveness

Build stakeholder confidence through transparent, ethical, and accountable AI practices - delivered at speed and validated for real-world performance.

Embedded Platform Deep Dives

NIMBUS Uno

- Integrated data pipeline with built-in data quality, lineage tracking, experiment management, and prompt/version control.

- GenAI observability: hallucination detection, grounding score computation for RAG, safety/PII/toxicity checks, latency and token/cost monitoring.

- CI/CD and ITSM integrations for automated revalidation, dataset refresh vetting, and evidence capture.

MRM Vault

- Global control library mapped to EU AI Act, NIST AI RMF, ISO/IEC 42001/23894, GDPR/CCPA, plus sector-specific overlays (finance, healthcare, public sector).

- 360° AI inventory with automated risk classification, templated compliance reviews, role-based sign-offs, and tamper-evident audit trails.

- Runtime model wrapping for rate limits, output filtering, content rules, and data redaction.

MoDeVa

- Standardized test packs for accuracy, robustness, stability, stress, and adversarial testing.

- Fairness & bias validation across cohorts with automated mitigation playbooks.

- LLM/RAG evaluation tools for hallucination scoring, faithfulness testing, grounding metrics, and safety/PII compliance.

- Integrated human-in-the-loop workflows for qualitative evaluation & prompt/test set versioning.

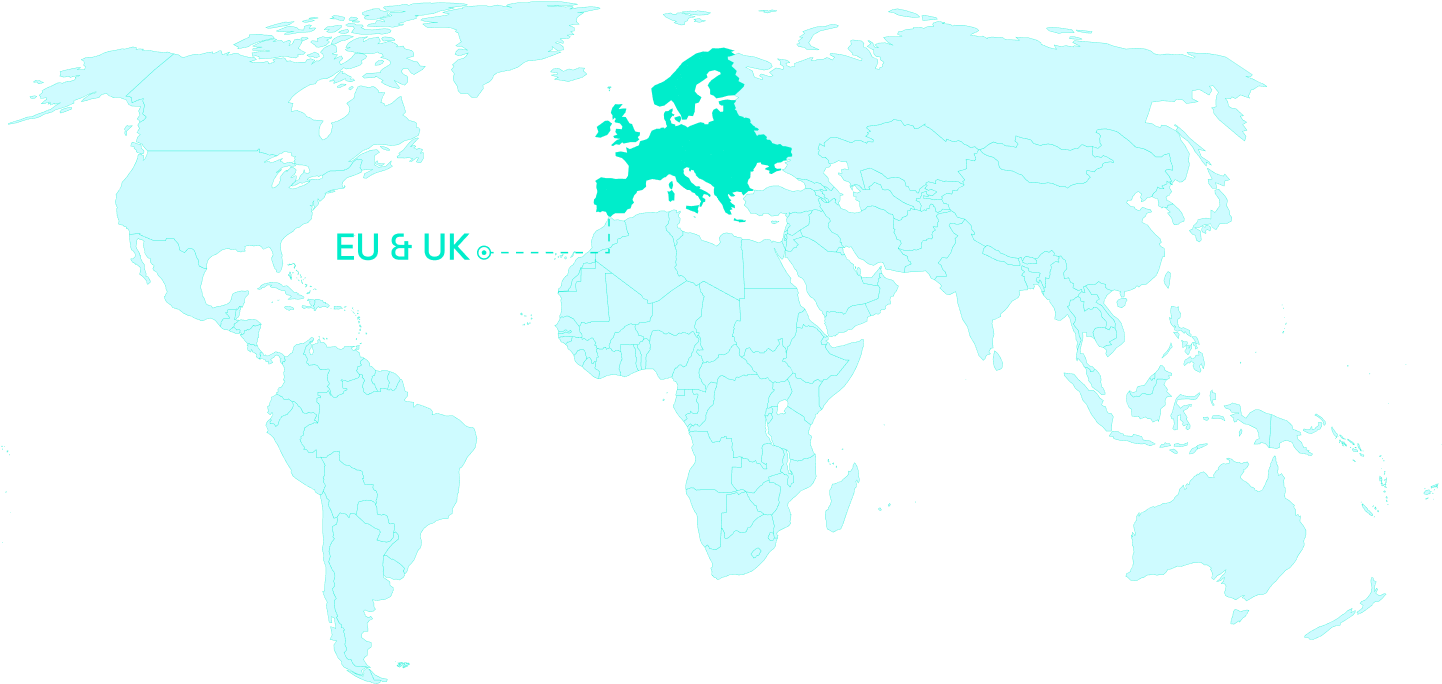

Why AI Governance Matters

Financial institutions face increasing regulatory scrutiny and operational and reputational risk when deploying AI. Comprehensive, multidisciplinary governance is essential to ensure transparency, accountability, and trust in AI use-case adoption.

EU & UK

- EU AI Act (EU)

- PRA SS1/23 (UK)

- General Data Protection Regulation & Data Protection Act (EU/UK)

- DORA (EU)

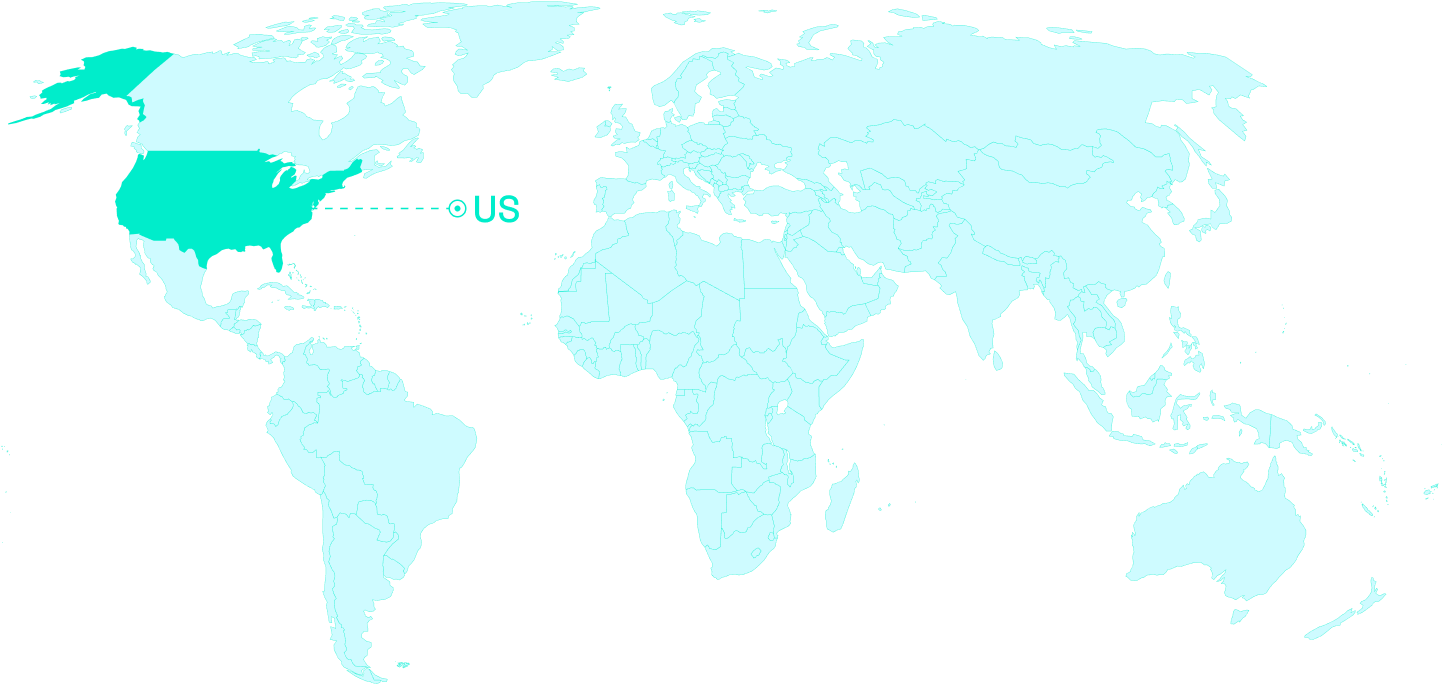

US

- California AI Act (USA)

- NIST AI RMF (USA)

- NAIC AI Guidelines (USA)

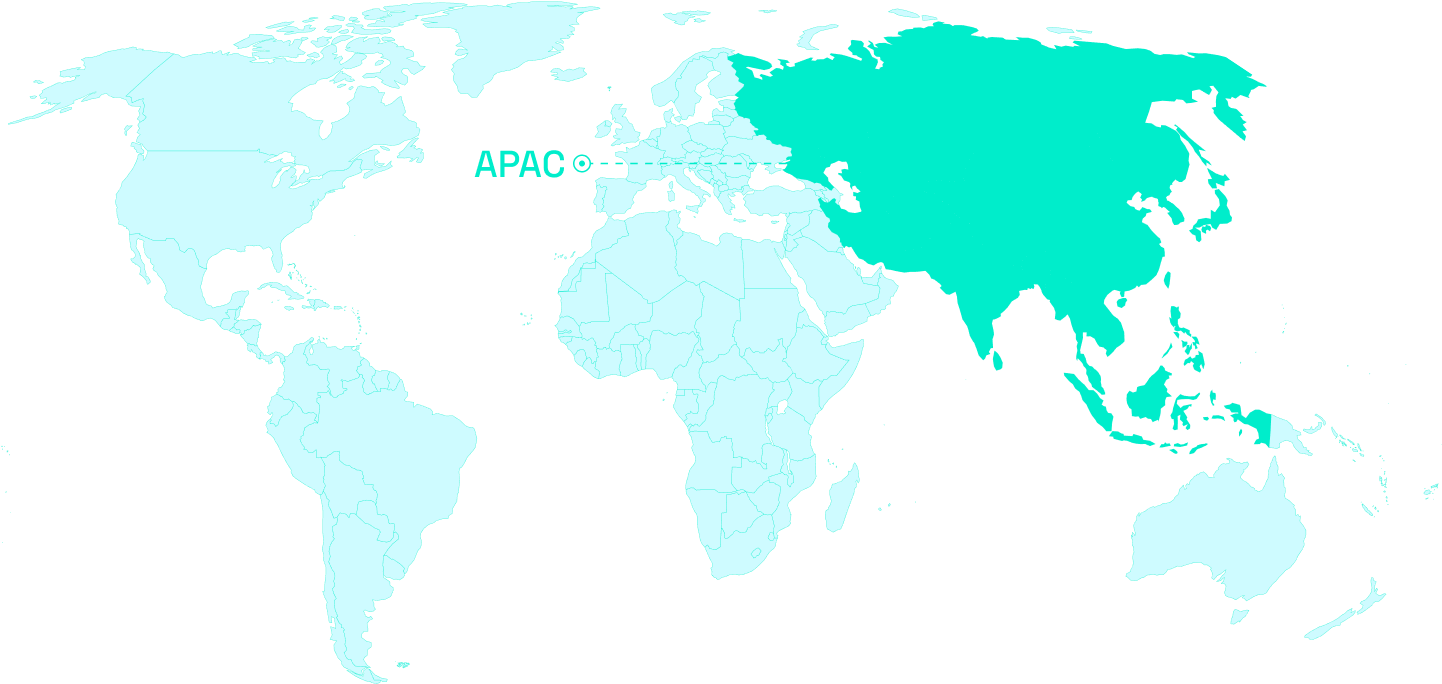

APAC

- OWASP Top 10 LLM (USA)

- APRA CPS 230 (Australia)

- China Cybersecurity Law

- Hong Kong PDPO

- Singapore PDPA

MENA

- CBUAE Mandates: Consumer Protection Regulation (UAE)

Schedule a demo and see how our AI Ecosystem can help your organization govern AI responsibly, satisfy regulators, and drive innovation at enterprise scale.
